As an HR professional, you might be daunted by the jargon spouted by some psychometricians. This blog article intends to dispel fear, demystify the jargon, and provide solid information in language that’s as simple as possible.

One of the most basic questions a consumer of assessments (and assessment results) asks is: “Is this assessment working well?” In the Human Resources (HR) context, the key question is: “By using scores from this assessment or interview, are we selecting people who will be able to do the job well?”

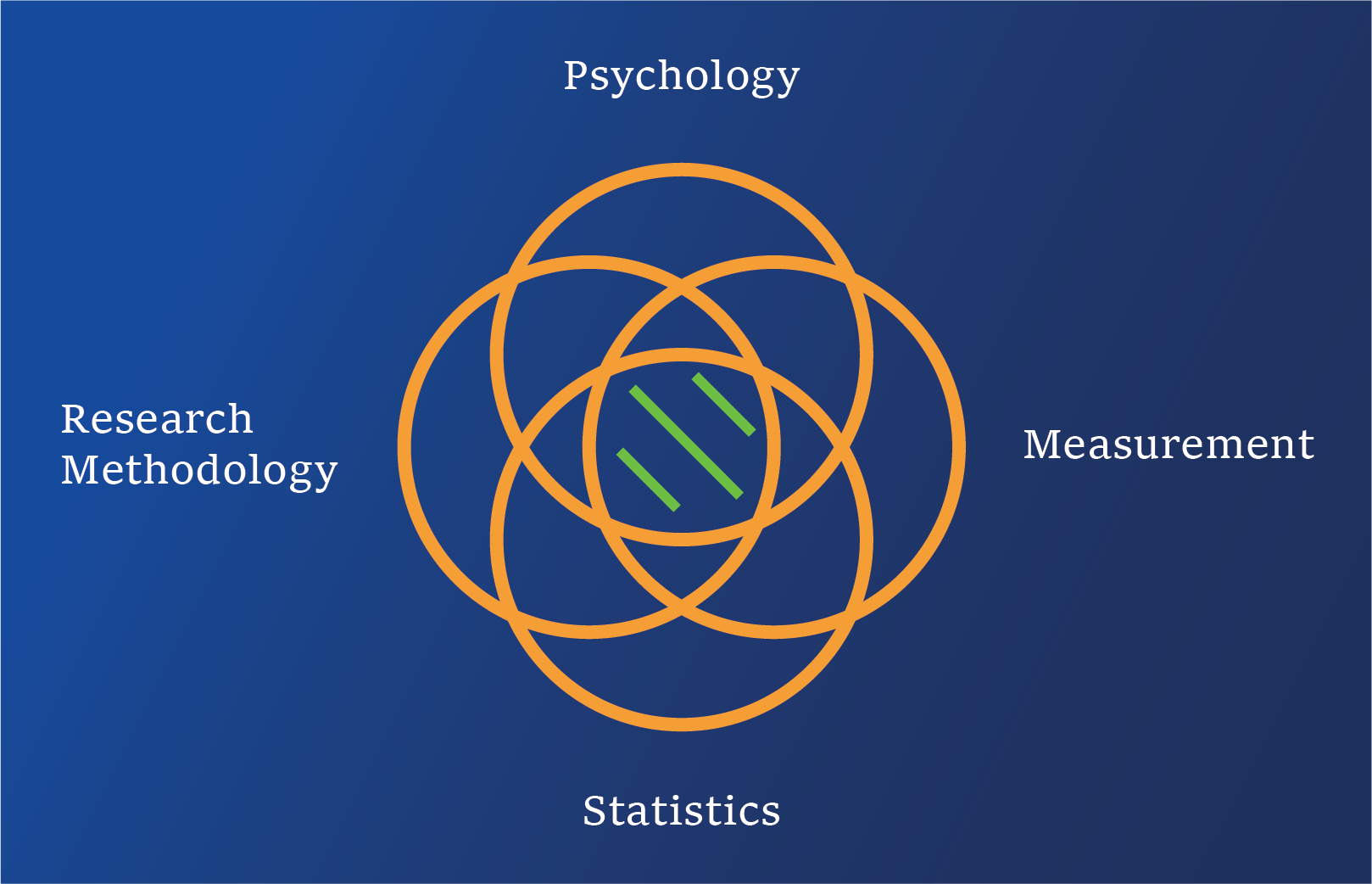

In psychometric jargon, to answer the above questions, we need to evaluate “Assessment Effectiveness.” To do so, we look at the 3 most essential psychometric quantities:

- Validity

- Reliability

- Fairness

Validity

Regarding validity, we first need evidence of the assessment’s content validity. For this, your assessment provider supplies a document describing how they decided what to measure, how the questions were created, and how they established relevance to the target job or job cluster. All worthy assessment providers have such a document already prepared and available for you. If you do not already have it, all you need to do is ask for it.

Then, you need to see a number that indicates the strength of the relationship between the assessment scores and actual job performance for people who were hired using the assessment. In psychometric jargon, this number is a predictive validity coefficient. To calculate it, the assessment provider will need real job performance data from people whom you actually hired. Make this a priority, as it will enable you to answer questions such as:

- Is my “New Hire Quality” improving?

- Do people who score higher on the interview outperform those who score lower?

- Is the assessment empowering me to make more correct selection decisions?

If you do only ONE thing, ensure you get the predictive validity coefficient computed with at least 100 of your own new hires. Do this as soon as possible. Only renew your contract if the predictive validity coefficient, computed using your own data, is suitably high.

What counts as suitably high depends upon the number of people involved in the research study. The predictive validity coefficient can range between -1.00 and +1.00. When you provide good performance data on 100 or more of your own people, you can expect the coefficient to be positive and in the +.40 to +.60 range. For more detail about setting realistic expectations for predictive validity numbers, see the articles by Robertson & Smith (2001) and McDaniel and his colleagues (1994). References for both articles are provided at the end of this blog.
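To make the number less mysterious, here is a minimal Python sketch of how a predictive validity coefficient is commonly computed: as a Pearson correlation between assessment scores and later job performance ratings. The function name `predictive_validity` and the sample figures are hypothetical, made up purely for illustration. Your provider may use a different or more refined procedure, and a real study would use at least 100 people rather than the handful shown here.

```python
from math import sqrt


def predictive_validity(scores, performance):
    """Pearson correlation between assessment scores and job performance.

    Both arguments are equal-length lists, one entry per hired person.
    The result lies between -1.00 and +1.00.
    """
    if len(scores) != len(performance):
        raise ValueError("Each person needs both a score and a rating.")
    n = len(scores)
    if n < 2:
        raise ValueError("At least two people are required.")

    mean_s = sum(scores) / n
    mean_p = sum(performance) / n

    # Sums of cross-products and squared deviations from the means.
    cross = sum((s - mean_s) * (p - mean_p) for s, p in zip(scores, performance))
    ss_s = sum((s - mean_s) ** 2 for s in scores)
    ss_p = sum((p - mean_p) ** 2 for p in performance)

    if ss_s == 0 or ss_p == 0:
        raise ValueError("Scores and ratings must each vary across people.")
    return cross / sqrt(ss_s * ss_p)


# Hypothetical data: assessment scores at hire, and manager ratings later on.
assessment_scores = [52, 61, 70, 45, 88, 74, 66, 58]
performance_ratings = [3.1, 3.4, 3.9, 2.8, 4.6, 3.7, 3.8, 3.0]

r = predictive_validity(assessment_scores, performance_ratings)
print(f"Predictive validity coefficient: {r:+.2f}")
```

A positive value means that people who scored higher on the assessment tended to perform better on the job. With very small samples the number can swing widely, which is why the 100-person minimum matters.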

Until you have sufficient data from your own people, and BEFORE YOU MAKE A PURCHASE DECISION, request predictive validity coefficients from other clients (names are not needed as proprietary clauses in agreements usually prevent this) where the assessment has been used to select people for jobs with the same or similar responsibilities to your “target” job or job cluster. Get at least 3 predictive validity coefficients each based on at least 100 people, and ensure that these people match the demographics of your job applicants. If the demographics of samples used to calculate the predictive validity coefficients do not match the demographics of your job applicants, then reject the numbers as unsuitable for you to consider when making such an important purchase decision and significant financial investment.

Reliability

Psychometricians can compute any of several reliability coefficients. One of these is an Internal Consistency Reliability coefficient, which indicates whether job applicants responded to the questions in the interview or assessment in a consistent manner. You can think of this consistency measure, loosely, as a “lie detector” embedded within the assessment.

The most common Internal Consistency Reliability coefficient is Cronbach’s Coefficient Alpha. This single number typically falls on a scale from 0.00 to 1.00; numbers above 0.80 are desired, although numbers above 0.70 are acceptable. Thus, a Cronbach’s Coefficient Alpha of 0.85 indicates that job applicants responded to the questions in the assessment in a consistent manner. Researchers interpret this number as an indicator of whether the set of questions in the assessment was understood and interpreted by most job applicants in the same way.
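For readers who want to see what sits behind this number, the sketch below applies the standard formula for Cronbach’s Coefficient Alpha: the number of questions divided by that number minus one, multiplied by one minus the ratio of the summed question variances to the variance of the total scores. The function name `cronbach_alpha` and the response data are hypothetical and serve only as an illustration, not as your provider’s actual procedure.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's Coefficient Alpha for a table of question scores.

    `responses` is a list of rows, one row per applicant; each row holds
    that applicant's score on every question, in the same question order.
    """
    if len(responses) < 2:
        raise ValueError("At least two applicants are required.")
    k = len(responses[0])
    if k < 2:
        raise ValueError("At least two questions are required.")
    if any(len(row) != k for row in responses):
        raise ValueError("Every applicant must answer every question.")

    # Sample variance of each question across applicants.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each applicant's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("Total scores must vary across applicants.")

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: six applicants, four questions scored 1 to 5.
responses = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
    [4, 4, 5, 4],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.2f}")
```

In this made-up sample, applicants who score high on one question tend to score high on the others, so the coefficient comes out well above the 0.80 benchmark. Six applicants is far too few for a real study; the sample is kept tiny only so the arithmetic can be followed.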

It is smart to always ask for the reliability number to verify that it is close to or above 0.80. Once assessment scores are available, an Internal Consistency Reliability coefficient can be readily computed. So, request it. Your assessment provider should have this number readily available for you, based on existing data from the research conducted to develop the assessment. Get Internal Consistency Reliability coefficients from at least 3 relevant samples of people.

Useful guideline: When reviewing numbers based on other organizations’ data, ensure that the people in the research samples match the demographics of your job applicants. If the demographics of the samples used to calculate the Internal Consistency Reliability coefficients do not match the demographics of your job applicants, then reject the numbers because they are of unsuitable quality for informing your decision.

Other reliability coefficients may require more time and additional data, so be understanding, but feel comfortable always requesting an Internal Consistency Reliability coefficient for the assessment. Again, as soon as possible ask your assessment service provider to calculate the Internal Consistency Reliability coefficient using data from your own job applicants.

Fairness

Fairness is also called equity or the absence of bias. The Equal Employment Opportunity Commission has specific guidelines that assessment developers follow to establish and document that scores from an assessment are fair. We will not delve into these details here. You can assume that the professionals on the assessment developer’s team are conversant with the Uniform Guidelines on Employee Selection Procedures (1978). Simply request the fairness numbers, and graphs if they are available. The graphs will bring the Fairness Indices to life and help you see how similarly groups (e.g., males and females) who are matched on job performance score on the assessment. You simply need a document that says: scores from this assessment are fair to females and males, people 40 or older and those under 40, and people from different racial/ethnic classifications. In America, when doing fairness calculations, we often use the following racial/ethnic categories: White; Black or African American; Hispanic or Latino; American Indian or Alaska Native; Asian; and Native Hawaiian or Other Pacific Islander.

Before putting a product on the market, a responsible assessment developer would have completed several studies to establish fairness. An integral part of these studies is flagging individual questions or statements that show statistical bias and repairing or replacing them before doing a new study. By the time the assessment gets to you, its fairness should be well-documented and beyond reproach.

Your professional responsibility is to have the service provider use data from your new hires to calculate fairness statistics for your organization. Failure to do so is to neglect your duty. It is normal to conduct the fairness analyses at the same time as the predictive validity study.

If you proactively conduct return on investment analyses, it is likely that you already utilize your applicant flow data to calculate metrics such as time to hire, cost per hire, new hire quality and source channel efficiency. At the same time, it is a good idea to use your applicant flow data to ensure that your 4/5ths rule statistics are in compliance with guidelines from the Equal Employment Opportunity Commission (EEOC). If you need help with this, please reach out to the psychometric-solutions.com team.
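As a rough illustration of the 4/5ths rule check, the sketch below computes each group’s selection rate (hires divided by applicants) and compares it with the rate of the group selected most often. A ratio below 0.80 is the usual signal to look more closely. The function name `impact_ratios`, the group labels, and the applicant counts are all hypothetical; a real compliance review involves more than this single ratio.

```python
FOUR_FIFTHS = 0.80  # threshold taken from the 4/5ths rule


def impact_ratios(applicant_flow):
    """Compare each group's selection rate with the highest group's rate.

    `applicant_flow` maps a group label to (number of applicants, number hired).
    Returns a dict mapping each group label to its impact ratio.
    """
    rates = {}
    for group, (applicants, hires) in applicant_flow.items():
        if applicants <= 0:
            raise ValueError(f"Group {group!r} has no applicants.")
        rates[group] = hires / applicants

    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No one was hired, so ratios cannot be computed.")
    return {group: rate / highest for group, rate in rates.items()}


# Hypothetical applicant flow data: (applicants, hires) per group.
flow = {
    "Group A": (120, 48),
    "Group B": (80, 24),
}

for group, ratio in impact_ratios(flow).items():
    status = "review" if ratio < FOUR_FIFTHS else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({status})")
```

In this made-up example, Group A is hired at a rate of 0.40 and Group B at 0.30, so Group B’s ratio is 0.30 / 0.40 = 0.75, which falls below the 0.80 threshold and would warrant a closer look.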

Data Requirements

- Content Validity – at minimum, requires a job description and job analysis to establish the job relevance of questions used in the assessment.

- Reliability – requires assessment score data, i.e., the score each person received on each question.

- Predictive Validity – requires job performance and assessment score data.

- Fairness – requires job performance data, assessment score data and demographic data (e.g., gender, age and race category).

This concludes our brief tour of the criteria you need to use when evaluating “Assessment Effectiveness.”

Summary

When evaluating Assessment Effectiveness pay attention to validity, reliability and fairness.

Validity pertains to the accuracy of the decisions you make on the basis of assessment scores. Effective assessments produce scores that empower you to make more correct than incorrect selection decisions. When evaluating an assessment’s content validity, job relevance is essential. Pay significant attention to the predictive validity coefficient computed using your own data. Only renew your contract if the predictive validity coefficient, computed using your own data, is suitably high. Expect numbers in the +.40 to +.60 range.

Reliability is usually well-established and documented. Simply ask for it, as your assessment provider will have it ready and available for you. Prior to your purchase decision, ensure that the Internal Consistency Reliability coefficients provided for similar jobs, from other clients, are consistently above 0.80. Then, ask your assessment provider how soon they could calculate this coefficient using your own data.

Responsible assessment developers complete several studies to establish fairness before delivering their products to clients. As a responsible client seeking to maximize the Return on Investment for your company, ask the service provider to use data from your new hires to calculate fairness statistics for your organization.

We hope you found this blog article helpful. For more information or assistance, please contact us at info@psychometric-solutions.com.

Future Blog Article

- The correct data and metrics to use to evaluate each individual’s job performance. What are the keys regarding appropriate and accurate Measurement of an Individual’s Job Performance?

References

Equal Employment Opportunity Commission, the Office of Personnel Management, the Department of Justice, the Department of the Treasury, the Department of Labor’s Office of Federal Contract Compliance, the Civil Service Commission and the Commission on Civil Rights (1978). Uniform Guidelines on Employee Selection Procedures. Washington, DC: Author.

McDaniel, M. A., Whetzel, D. L., Schmidt, F. L. & Maurer, S. D. (1994). The validity of employment interviews: A comprehensive review and meta-analysis. Journal of Applied Psychology, 79 (4), 599-616.

Robertson, I. T. & Smith, M. (2001). Personnel selection. Journal of Occupational and Organizational Psychology, 74, 441-472.

About the Author

Dr. Dennison Bhola has more than 25 years of experience working in the field of psychometrics creating validated instruments such as assessments, structured interviews and inventories. With his teammates, he has developed more than 1000 assessments for use by organizations around the globe. His expertise includes evaluating new hire quality, assessment effectiveness, source channel efficiency, time to hire, cost per hire, and other such metrics that are of critical importance to Human Resource Professionals. He also specializes in fairness analyses and auditing compliance with Equal Employment Opportunity guidelines, such as the 4/5ths rule.